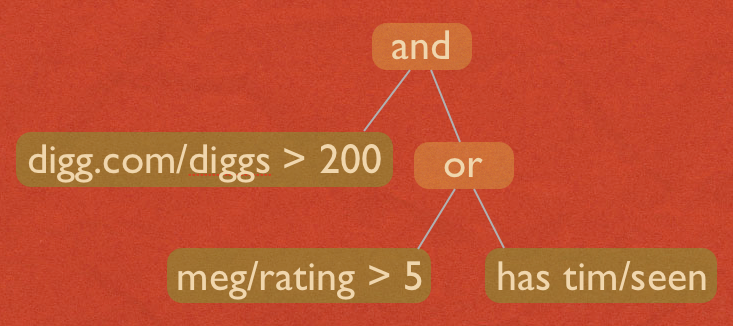

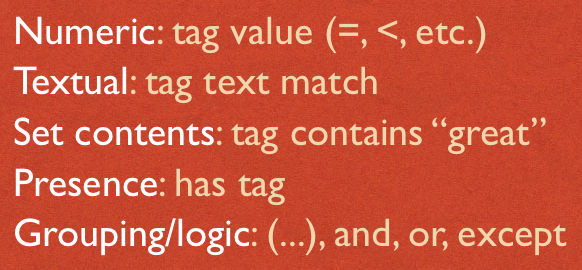

Fluidinfo has a simple query language. If you are familiar with any other query language, you can probably learn the entire Fluidinfo language in a couple of minutes. The image below shows a summary of the whole language. Without going into details, you can immediately tell there’s not much to it. Click on the image to read more. In contrast, SQL is massive. The SQL 2008 standard comes in 9 parts, the second of which is over 1300 pages.

The downside to having such a simple query language is that complicated data retrieval, processing and organization are not done server-side. Applications have to request data in a simpler fashion, process it locally, and make further network requests if they need additional related data.

The strong upside is that a deliberately simple query language permits architectural simplicity. Because query processing is the most complex part of Fluidinfo, the query language bounds the underlying complexity and has a direct influence on overall system implementation and architecture. Whereas a complex query language, such as SQL, makes it difficult to scale, a simple one makes scaling simpler (at least in theory; you still have to build it, of course!).

The trick is getting the balance right: design a query language that’s practical and useful for a wide variety of common tasks, but whose simplicity confers important architectural advantages.

Here are a few ways in which the Fluidinfo query language and the resultant architecture give us hope that we’re building something that can grow.

- Complex queries are not possible. You can make a big query in Fluidinfo or a deep query or a query that returns many results, but you can’t make a complex query—I mean the kind of query that can bring an SQL server to its knees. Just for starters, the Fluidinfo query language has no JOIN statement. When a query language is complex, the database is at the mercy of its applications: Applications can submit queries with JOINs that are so complex that the required data cannot reasonably be brought together (JOINed) in order for the selection to proceed.

- All query resolution is simple. In the parse tree of any Fluidinfo query, all the leaves are simple. Each requires either a single lookup in a B-tree (or similar), or a single text match. The result of the processing at a leaf is always a set of object ids. The internal nodes of the query tree only require set operations (union, intersection, difference) on object ids. There’s nothing else. Below is a fragment of a query parse tree.

- Parallelization is trivial. Because the values of Fluidinfo tags are stored separately, as in a column store, leaf queries are always sent in parallel to the independent servers that maintain the tags in question.

- It scales horizontally. Because tag values are stored independently and internal query tree nodes are always simple set operations on object ids, the architecture is easy to scale horizontally. We built (and open-sourced) txAMQP to combine Thrift and AMQP with Twisted to give ourselves transparent messaging-mediated RPC. That means new servers can be deployed to run services that simply join or create the appropriate AMQP queues, and they immediately begin receiving RPC calls. When more tag servers or set operation servers are needed, it is trivial to add them.

- Unused tags can be taken offline. Because tags are stored independently, those that have not been used for some time can have their values serialized and stored in a cheaper medium for the interim. They need not occupy expensive and scarce RAM. When they’re next queried—if ever—they can rapidly be brought back online. This is an architectural advantage that’s mainly made possible by the system design, not the query language simplicity. I’ve included it nevertheless, because this kind of optimization might not be possible in a system with a query language that demanded a more complex underlying data organization.

- It can scale down as well as up. Just as scaling up by adding servers is simple, servers can be taken down during quieter periods. Set operation servers can simply disappear. Tag servers can migrate management of their tags to other servers or just take tags offline – they will be re-animated by another tag server when next needed.

- Adaptive affinity is straightforward. When tags are frequently being queried together, they can be migrated to the same tag server. Then an entire sub-query involving both can be sent to that server and the result, just a set of object ids, flows up through the query tree exactly as it would have had the leaves been processed on separate servers. And when things get too hot, i.e., tags being stored together have created a hotspot, they can be migrated to separate servers.
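The evaluation model described in the list above (leaves that each yield a set of object ids, internal nodes that only perform set operations, and leaves resolved in parallel) can be sketched in a few lines of Python. This is an illustrative sketch only, not Fluidinfo's actual implementation: `TAG_STORE`, `Leaf`, `Node`, `resolve_leaf` and `evaluate` are hypothetical names, the in-memory dictionary stands in for the independent tag servers, and a thread pool stands in for the messaging-mediated RPC.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Optional, Union

# Hypothetical stand-in for the independent tag servers:
# tag path -> {object id: tag value}.
TAG_STORE = {
    "meg/rating": {"obj1": 5, "obj2": 3},
    "tim/rating": {"obj2": 4, "obj3": 1},
    "terry/seen": {"obj1": True, "obj3": True},
}


@dataclass(frozen=True)
class Leaf:
    tag: str
    test: Optional[Callable[[object], bool]] = None  # None means "has <tag>"


@dataclass(frozen=True)
class Node:
    op: str  # one of the keys of SET_OPS
    left: Union["Leaf", "Node"]
    right: Union["Leaf", "Node"]


# Internal nodes only ever perform set operations on object ids.
SET_OPS = {
    "and": frozenset.intersection,
    "or": frozenset.union,
    "except": frozenset.difference,
}


def resolve_leaf(leaf):
    """One lookup against one tag; the result is always a set of object ids."""
    values = TAG_STORE.get(leaf.tag, {})
    return frozenset(
        oid for oid, value in values.items()
        if leaf.test is None or leaf.test(value)
    )


def collect_leaves(tree):
    """Return the leaves of the tree, left to right."""
    if isinstance(tree, Leaf):
        return [tree]
    return collect_leaves(tree.left) + collect_leaves(tree.right)


def evaluate(tree, pool):
    """Resolve every leaf in parallel, then combine the id sets bottom-up."""
    leaves = collect_leaves(tree)
    resolved = dict(zip(map(id, leaves), pool.map(resolve_leaf, leaves)))

    def combine(node):
        if isinstance(node, Leaf):
            return resolved[id(node)]
        return SET_OPS[node.op](combine(node.left), combine(node.right))

    return combine(tree)


if __name__ == "__main__":
    # Roughly: meg/rating > 4 and has terry/seen
    query = Node("and", Leaf("meg/rating", lambda v: v > 4), Leaf("terry/seen"))
    with ThreadPoolExecutor(max_workers=4) as pool:
        print(sorted(evaluate(query, pool)))
```

Because every leaf returns the same kind of value, a set of object ids, it makes no difference to the rest of the tree where a leaf (or a whole sub-query) was resolved. That is what makes the affinity and migration tricks above cheap.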

That’s enough for now. There are other, more detailed, advantages that I’ve omitted for brevity. I’m trying to keep each of these posts down to reasonable size.

Does the query extend to, say, “meg/rating > tim/rating”, or is this too complex?

Comment by Mat — February 24, 2011 @ 8:40 pm

Hi Mat

At the moment that’s too complex (believe it or not!). But it’s one of the things I think we will end up supporting, as it’s so basic and useful. To do it right now, you’d send a query that included “has meg/rating and has tim/rating”, ask to get back those two tags (the /values HTTP call lets you do that), and then post-process the results yourself. That’s OK in many situations, but it’s obviously not ideal, and not great if there’s a ton of data (lots of ratings) but the result set is very small, e.g., in a mobile environment.

Thanks for your interest!

Terry

Comment by terrycojones — February 25, 2011 @ 4:04 pm
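The client-side workaround described in the reply above can be sketched as follows. This is only an illustration under stated assumptions: the `values` dictionary is a simplified, hypothetical shape for the data returned after asking for both tags on objects matching “has meg/rating and has tim/rating” (it is not the actual /values response format), and `objects_where_greater` is a made-up helper name.

```python
def objects_where_greater(values, left_tag, right_tag):
    """Return ids of objects whose left_tag value exceeds their right_tag value.

    `values` maps object id -> {tag path: tag value}. Objects missing either
    tag are skipped, mirroring the "has ... and has ..." query.
    """
    return sorted(
        oid
        for oid, tags in values.items()
        if left_tag in tags
        and right_tag in tags
        and tags[left_tag] > tags[right_tag]
    )


# Hypothetical, simplified data as it might look after fetching both tags.
values = {
    "obj1": {"meg/rating": 5, "tim/rating": 3},
    "obj2": {"meg/rating": 2, "tim/rating": 4},
    "obj3": {"meg/rating": 4, "tim/rating": 4},
}

matching = objects_where_greater(values, "meg/rating", "tim/rating")
```

The cost Terry mentions is visible here: every object carrying both tags has to cross the network, even when only a handful of them end up in `matching`.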