In an October 2010 O’Reilly Radar article titled Getting closer to the web2.0 address book, I described how a set of applications might coordinate via shared storage to answer Tim O’Reilly’s question:

Given that knowledge about who I know is in my phone, in the O’Reilly org chart, and in the set of authors of O’Reilly books, why do I still have to manually approve friend requests from Facebook, LinkedIn, etc.?

In that article I argued that this aspect of what Tim calls the web 2.0 address book should be solved not by an application, or even by applications making API calls to one another, but by a set of applications that communicate asynchronously via shared writable storage. Besides shared storage, this needs a permissions system, a query language, and conventions followed by the cooperating applications. No API calls are needed between applications: their interaction takes place entirely via shared storage. API calls are only used to add, retrieve, and query data in the shared storage.

At Gluecon in Denver last week, I gave a talk titled The Real Promise of Cloud Storage in which I argued that shared storage gives two big advantages. The second was that it allows the evolution of “asynchronous data protocols”. The final slides of the presentation showed the series of steps that would be taken to build what Tim was asking for. I wasn’t able to go into the detail of how this would work in the time available. So in this post I’ll give the detail of how this can be implemented using Fluidinfo. The post is long because I want to spell out the steps, the assumptions, the Fluidinfo tag permissions, the queries, the security aspects, and so on.

The business case

I’m going to pretend LinkedIn is the company that would like its users to have more flexibility in approving friend requests. Additional flexibility could include personalized friend resolvers (for example, based on the O’Reilly org chart) and also applications that could assist in various ways and, if desired, even automate some responses (e.g., for people you have called more than twice in the last month, or people you have put on a particular list on Twitter).

LinkedIn is a good candidate because they have traditionally placed less emphasis on being a burgeoning social network, and more on link quality, by requiring a higher level of proof to create more meaningful reciprocal connections. That seems to be changing slowly now, though. You used to need to know another user’s email to connect to them, but that has been relaxed over the last couple of years. It’s obvious why LinkedIn would do this: knowing connections between their members is valuable. LinkedIn will now show me suggested people I might know, and let me reach out to connect with them with a single click. No need to go look up email addresses, etc.

I suspect many people have valid unanswered friend connection requests sitting in their LinkedIn inboxes. I do, and I almost never make time to deal with them. Those requests, if accepted, would add value to LinkedIn. The (valid) connection requests that sit unanswered for whatever reason represent unrealized value. So it’s very much in LinkedIn’s interest to help people connect. They should be interested in a solution to Tim’s dilemma, as should Facebook and any social network whose friending mechanism is reciprocal.

Using the shared writable storage of Fluidinfo to solve this problem

Here is how this could be achieved with Fluidinfo.

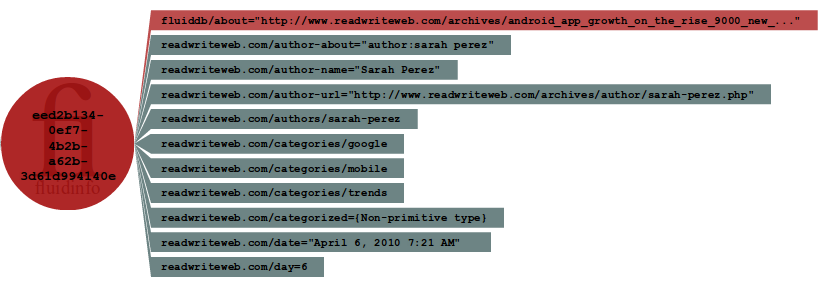

1. LinkedIn announces that it will support personalized friend resolution via Fluidinfo. Users will have control over what kinds of friend resolution they want to enable. LinkedIn will use the linkedin.com user name in Fluidinfo. (Fluidinfo makes it possible to put domain names on data. Every Fluidinfo user name that corresponds to a domain is reserved for its internet domain owner.)

2. Suppose Tim, a LinkedIn user, decides to try the new functionality. LinkedIn directs him to a page where he can choose a Fluidinfo user name (let’s say "tim") and create a Fluidinfo tag called tim/friend-request/linkedin. Tim sets the permissions so that the Fluidinfo linkedin.com user can both add and delete the tag on Fluidinfo objects. He then tells LinkedIn his Fluidinfo user name. This is necessary because LinkedIn will later create data in Fluidinfo on Tim’s behalf. Note that LinkedIn only knows Tim’s Fluidinfo user name, not his password.

3. Suppose a user Alice now tells LinkedIn that she is a friend of Tim’s. LinkedIn creates a new object in Fluidinfo and puts an instance of tim/friend-request/linkedin onto it with a value (perhaps in JSON format) holding the information Alice is willing to supply as evidence that she knows Tim. This could include things like her Twitter user name, her phone number, the name of a company she used to work at with Tim, etc. LinkedIn also adds a random request identifier (more on which below).
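As a minimal sketch of what the tag value in step 3 might contain, the snippet below builds a JSON string with Alice's evidence and a random request identifier. The field names (`request_id`, `evidence`, `twitter`, `phone`) and the 16-digit identifier format are assumptions made for illustration; the only thing taken from the scenario is that the value is "perhaps in JSON format" and carries a random identifier.

```python
import json
import secrets


def build_friend_request(evidence: dict) -> str:
    """Return a JSON tag value: the requester's evidence plus a random request_id."""
    # 16 random decimal digits, zero-padded (the format is an assumption).
    request_id = str(secrets.randbelow(10**16)).zfill(16)
    return json.dumps({"request_id": request_id, "evidence": evidence})


# Hypothetical evidence that Alice is willing to share with Tim's resolvers.
value = build_friend_request({
    "name": "Alice",
    "twitter": "alice",
    "phone": "+1-555-0100",
})
request = json.loads(value)
print(request["request_id"], sorted(request["evidence"]))
```

The identifier comes from `secrets` rather than `random` because, as later steps rely on, it acts as a shared secret between LinkedIn and the resolvers Tim trusts.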

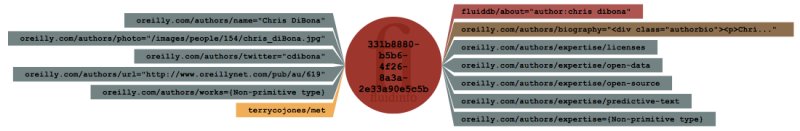

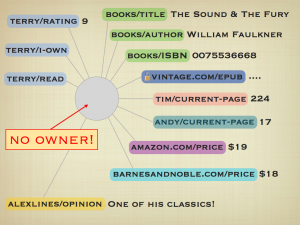

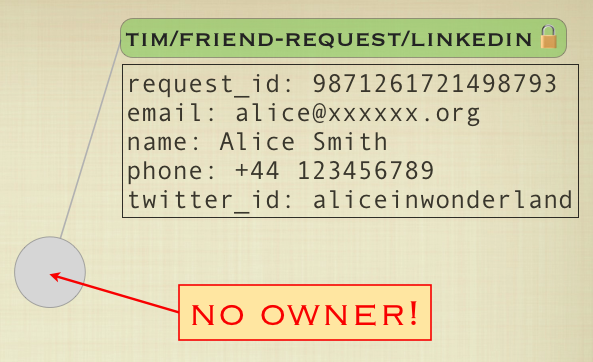

The object in Fluidinfo looks like this (the tag value is in the rectangle under the tag name):

Note that the object does not have an owner. That’s a fundamental feature of Fluidinfo: objects don’t have owners. Instead, tags on objects have permissions. That’s crucial in what follows, because it allows any application to add more information to the object representing the friend request.
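To make the "tags have permissions, objects have no owner" idea concrete, here is a toy in-memory model. It is not the Fluidinfo API: the `Store` and `Tag` classes, their method names, and the simple reader/writer sets are all invented for this sketch, and it assumes that LinkedIn and Tim can both read the request tag.

```python
from dataclasses import dataclass, field


@dataclass
class Tag:
    """A tag path plus the users allowed to write (add/delete) and read it."""
    path: str
    writers: set = field(default_factory=set)
    readers: set = field(default_factory=set)


class Store:
    """Toy shared storage. Objects have no owner: each is a dict of tag path -> value."""

    def __init__(self):
        self.tags = {}
        self.objects = []

    def create_tag(self, path, writers, readers):
        self.tags[path] = Tag(path, set(writers), set(readers))

    def create_object(self):
        self.objects.append({})
        return len(self.objects) - 1

    def put(self, user, obj_id, path, value=None):
        if user not in self.tags[path].writers:
            raise PermissionError(f"{user} may not add {path}")
        self.objects[obj_id][path] = value

    def get(self, user, obj_id, path):
        if user not in self.tags[path].readers:
            raise PermissionError(f"{user} may not read {path}")
        return self.objects[obj_id][path]


store = Store()
store.create_tag("tim/friend-request/linkedin",
                 writers={"linkedin.com"}, readers={"tim", "linkedin.com"})
store.create_tag("twitresolve.com/examined",
                 writers={"twitresolve.com"}, readers={"twitresolve.com"})

request = store.create_object()
store.put("linkedin.com", request, "tim/friend-request/linkedin",
          '{"request_id": "9871261721498793"}')

# Tim later grants a resolver read access to his request tag.
store.tags["tim/friend-request/linkedin"].readers.add("twitresolve.com")
# A different application adds its own tag to the very same object.
store.put("twitresolve.com", request, "twitresolve.com/examined")

try:
    store.get("rogue.example", request, "tim/friend-request/linkedin")
except PermissionError as err:
    print("denied:", err)
```

The point of the model is that two unrelated applications write to one object without either owning it; control lives entirely in the per-tag permission sets.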

A friend resolver based on Twitter friends: TwitResolve

4. Let’s suppose a keen-eyed developer notices the LinkedIn announcement and decides to write a Twitter-based friend resolver called TwitResolve. The developer grabs the internet domain twitresolve.com and then gets the twitresolve.com user name on Fluidinfo (as the domain owner, only they can do this, as above). TwitResolve will use two tags in Fluidinfo, twitresolve.com/accepted and twitresolve.com/examined. It sets the permissions on the tag twitresolve.com/accepted so that only the Fluidinfo user called linkedin.com can read it.

5. Let’s suppose Tim hears of TwitResolve and decides to use it. The TwitResolve sign-up page asks Tim to change the permissions on the Fluidinfo tag tim/friend-request/linkedin so that the Fluidinfo user twitresolve.com can read the tag. (The setting of the permission could be done in various ways, which we’ll ignore for now.) By giving TwitResolve permission to read the tag that LinkedIn puts onto objects, Tim enables TwitResolve’s participation in helping to resolve friend requests for him. TwitResolve also asks Tim for his Twitter username (and gets Tim to prove that).

6. TwitResolve runs a process periodically that asks Fluidinfo for the outstanding requests for Tim that it hasn’t already examined. The relevant query in Fluidinfo’s query language is has tim/friend-request/linkedin except has twitresolve.com/examined.
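The query in step 6 is a set difference: objects that have the request tag, minus objects that already have the examined tag. The sketch below models that over a plain dictionary; the `has` helper and the object identifiers are hypothetical, and a real deployment would send the query string to Fluidinfo instead of evaluating it locally.

```python
def has(objects, tag):
    """Return the ids of all objects carrying the given tag."""
    return {obj_id for obj_id, tags in objects.items() if tag in tags}


# Toy data: object id -> {tag path: value}.
objects = {
    "obj-1": {"tim/friend-request/linkedin": '{"request_id": "1"}'},
    "obj-2": {"tim/friend-request/linkedin": '{"request_id": "2"}',
              "twitresolve.com/examined": None},
    "obj-3": {"twitresolve.com/examined": None},
}

# has tim/friend-request/linkedin except has twitresolve.com/examined
pending = (has(objects, "tim/friend-request/linkedin")
           - has(objects, "twitresolve.com/examined"))
print(pending)  # {'obj-1'}
```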

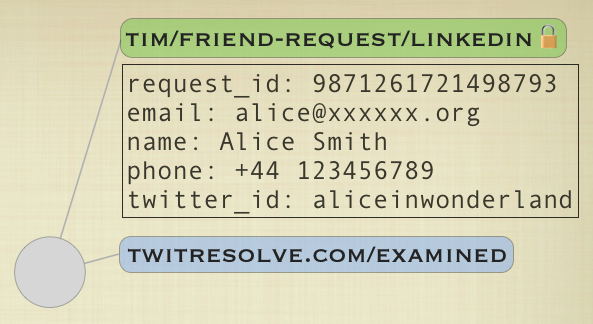

7. In our case, this query finds the object shown above and TwitResolve examines the value of the tim/friend-request/linkedin tag. It talks to Twitter to discover whether Tim is following Alice (it can do this because Alice’s Twitter user name is in the tag value, and Tim provided his Twitter user name to TwitResolve). Suppose Tim is not following Alice. TwitResolve cannot conclude anything, so it simply puts a twitresolve.com/examined tag (with no value) onto the request object in order to avoid re-examining this request. At this point the Fluidinfo object looks as below and the friend request is still unfulfilled.
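Steps 6 and 7 together amount to one pass of a small loop, sketched here against toy in-memory data. The Twitter lookup is replaced by a hypothetical `follows` set of (follower, followed) pairs, the JSON field names are assumptions, and the success branch (writing the request identifier into twitresolve.com/accepted) is inferred by analogy with what iPhoneFriender does later rather than stated for TwitResolve.

```python
import json

REQUEST = "tim/friend-request/linkedin"
EXAMINED = "twitresolve.com/examined"
ACCEPTED = "twitresolve.com/accepted"


def resolve_once(objects, follows, tim_twitter):
    """Examine each pending request once; accept only when Tim follows the requester."""
    for tags in objects.values():
        if REQUEST not in tags or EXAMINED in tags:
            continue  # not a request for Tim, or already examined
        request = json.loads(tags[REQUEST])
        requester = request["evidence"].get("twitter")
        if requester and (tim_twitter, requester) in follows:
            tags[ACCEPTED] = request["request_id"]
        tags[EXAMINED] = None  # marker tag with no value


objects = {
    "obj-1": {REQUEST: json.dumps({"request_id": "9871261721498793",
                                   "evidence": {"twitter": "alice"}})},
}
# Tim is not following Alice, so nothing can be concluded.
resolve_once(objects, follows=set(), tim_twitter="tim_on_twitter")
print(sorted(objects["obj-1"]))
# ['tim/friend-request/linkedin', 'twitresolve.com/examined']
```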

A friend resolver based on the O’Reilly org chart

8. News gets out quickly to the digerati and someone inside O’Reilly decides to write a resolver based on the O’Reilly org chart. They create two tags, similar to those created by TwitResolve, called oreilly.com/accepted and oreilly.com/examined, again setting the permissions on the former so that only the Fluidinfo user called linkedin.com can read that tag. Tim decides to use the new resolver, so he gives the oreilly.com user permission to read the tim/friend-request/linkedin tag.

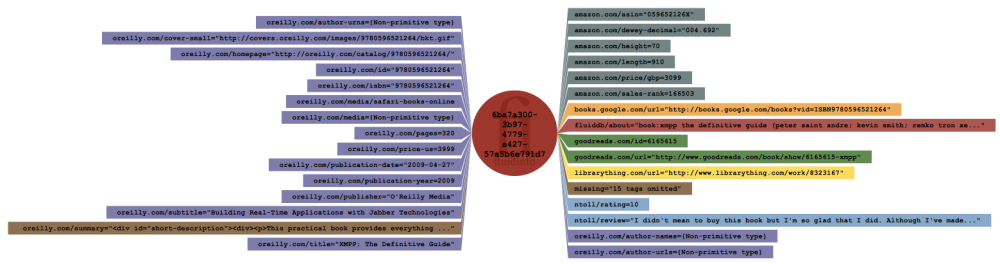

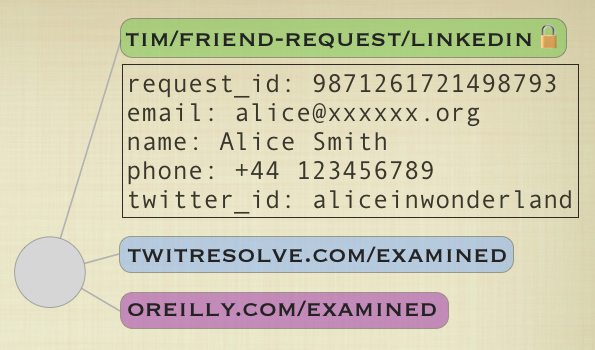

9. The O’Reilly resolver might be started by cron each night. When it runs, it sends a Fluidinfo query, just like TwitResolve does. Suppose Alice does not work at O’Reilly. The details in the request cannot confirm her as a friend of Tim’s. Just as with TwitResolve, the O’Reilly program puts an oreilly.com/examined tag onto the object, and we arrive at the following:

A friend resolver based on Amazon books

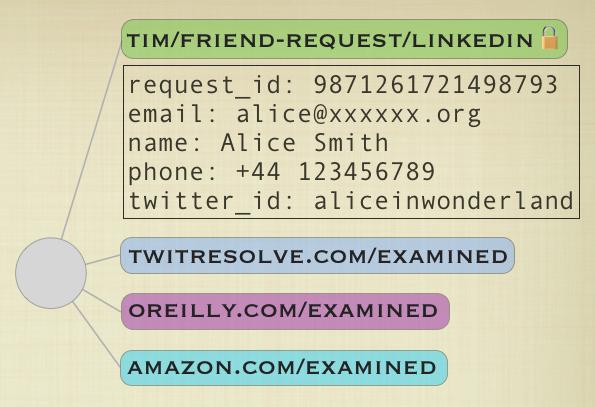

10. To give a third example (to fulfill Tim’s requirements), another resolver could be written based on authors of books. Suppose it’s written by someone at Amazon. (The details of how this might successfully match Alice to Tim aren’t important here.) Suppose it is also unsuccessful in matching this friend request. It adds an amazon.com/examined tag to the object:

An iPhone friend resolver: iPhoneFriender

11. Next, someone decides to write a resolver that can match based on phone numbers, called iPhoneFriender. They get the iphonefriender.com domain name and user name in Fluidinfo. They will use the tags iphonefriender.com/accepted and iphonefriender.com/examined, and set the permissions on the iphonefriender.com/accepted tag so that only the linkedin.com Fluidinfo user can read it.

When the iPhoneFriender application runs, it sends the Fluidinfo query has tim/friend-request/linkedin except has iphonefriender.com/examined and finds our object. It reads the request details. Suppose it finds Alice’s phone number in Tim’s phone address book, and can see whether Tim has called Alice or vice-versa, whether Tim has chosen not to accept her calls, etc. iPhoneFriender has preference settings to allow Tim to specify what kinds of requests it will ask him to confirm, and which to automatically accept. Suppose that one way or another the friend request is accepted.
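The preference settings could boil down to a small decision function like the one sketched here. Everything in it is hypothetical: the `Contact` fields, the three outcomes, and the default threshold of three calls (borrowed from the "called more than twice in the last month" example earlier) only illustrate the kind of policy Tim might configure.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ACCEPT = "accept"  # accept automatically
    ASK = "ask"        # ask Tim to confirm
    SKIP = "skip"      # no conclusion; just mark the request as examined


@dataclass
class Contact:
    in_address_book: bool
    calls_last_month: int
    blocked: bool


def decide(contact: Contact, auto_accept_calls: int = 3) -> Decision:
    """Apply Tim's (hypothetical) preferences to what the phone knows about a requester."""
    if contact.blocked or not contact.in_address_book:
        return Decision.SKIP
    if contact.calls_last_month >= auto_accept_calls:
        return Decision.ACCEPT
    return Decision.ASK


print(decide(Contact(in_address_book=True, calls_last_month=5, blocked=False)))
# Decision.ACCEPT
```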

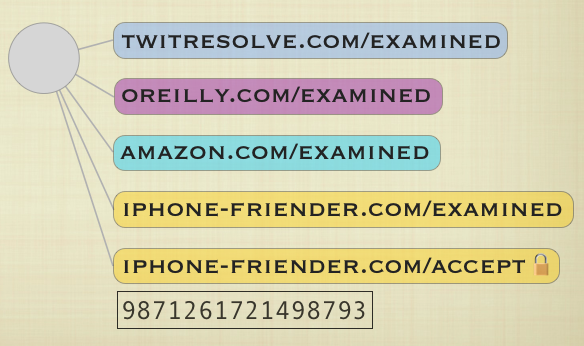

12. The iPhoneFriender application needs to tell LinkedIn that Alice is recognized as a friend of Tim’s. So it puts an iphonefriender.com/accepted tag with value 9871261721498793 onto the original object. This is the unique request_id value from the original request. Remember that the iphonefriender.com/accepted tag has its permissions set so that only linkedin.com can read it. Hiding the identifier in this way prevents rogue applications from falsely claiming to have satisfied friend requests. Only an application to which Tim has given permission to read the tim/friend-request/linkedin tag could know the request identifier, and only LinkedIn can read the identifier value out of the iphonefriender.com/accepted tag attached by iPhoneFriender. iPhoneFriender also adds an iphonefriender.com/examined tag to the object to avoid repeating work.

The Fluidinfo object now looks as follows (tag values, when present, are shown in rectangles under the tag names):

Friendship, requited!

13. Later, LinkedIn sends Fluidinfo the query has tim/friend-request/linkedin. The search matches our object and LinkedIn then gets a list of its tags. For tags named */accepted (where * matches any user name) it tries to read the value of the tag. If it finds a tag whose value matches the identifier in the request tag on the object, LinkedIn adds the friendship link inside its own site. It also deletes the tim/friend-request/linkedin tag from the object, resulting in:
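LinkedIn's side of step 13 can be sketched as a scan over the tags on one object. This is a local model rather than Fluidinfo code: the `settle` function, the `request_id` JSON field, and the rogue.example tag are invented for the example, and the constant-time comparison is a defensive choice of this sketch, not something the scenario prescribes.

```python
import fnmatch
import hmac
import json

REQUEST = "tim/friend-request/linkedin"


def settle(tags):
    """Return the */accepted tag whose value matches the request_id, or None.

    On a match, the request tag is deleted from the object.
    """
    if REQUEST not in tags:
        return None
    expected = json.loads(tags[REQUEST])["request_id"].encode()
    accepted_by = next(
        (path for path, value in tags.items()
         if fnmatch.fnmatchcase(path, "*/accepted")
         and isinstance(value, str)
         and hmac.compare_digest(value.encode(), expected)),
        None,
    )
    if accepted_by is not None:
        del tags[REQUEST]
    return accepted_by


tags = {
    REQUEST: json.dumps({"request_id": "9871261721498793"}),
    "rogue.example/accepted": "0000000000000000",  # a guess, so it is ignored
    "twitresolve.com/examined": None,
    "iphonefriender.com/accepted": "9871261721498793",
    "iphonefriender.com/examined": None,
}
print(settle(tags))     # iphonefriender.com/accepted
print(REQUEST in tags)  # False
```

A tag from an application that never saw the request cannot carry the right value, so LinkedIn needs no list of approved resolvers to reject it.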

At this point we’re basically done. There are many possible variations on the above: using timestamps to retry friend resolution, using timestamps to only examine recent requests, using separate tags to hold pieces of friend request information, using an extra linkedin.com tag to reduce the number of queries LinkedIn must make to find outstanding requests, etc. Applications are not forced to use an examined tag to avoid repeating work; if they do, they can name it whatever they please. It’s also easy to imagine more exotic resolvers, e.g., Tim giving people a secret random number and looking for that in the request (LinkedIn would have to allow Alice to add it to the request, obviously). Participating applications could also clean up by finding objects that carry their examined tag but no longer have a tim/friend-request/linkedin tag, and removing their examined and accepted tags (if present).
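The clean-up variation follows the same pattern as the earlier queries, with the tags swapped: something of the form has iphonefriender.com/examined except has tim/friend-request/linkedin. A minimal local sketch, using a hypothetical `clean_up` helper over toy data:

```python
REQUEST = "tim/friend-request/linkedin"
OWN_TAGS = ("iphonefriender.com/examined", "iphonefriender.com/accepted")


def clean_up(objects, request_tag, own_tags):
    """Strip this application's tags from objects whose request tag is gone.

    Returns the number of objects that were cleaned.
    """
    cleaned = 0
    for tags in objects.values():
        if request_tag in tags:
            continue  # request still outstanding: leave our tags in place
        mine = [tag for tag in own_tags if tag in tags]
        for tag in mine:
            del tags[tag]
        cleaned += bool(mine)
    return cleaned


objects = {
    "settled": {"iphonefriender.com/examined": None,
                "iphonefriender.com/accepted": "9871261721498793"},
    "pending": {REQUEST: "{}", "iphonefriender.com/examined": None},
}
print(clean_up(objects, REQUEST, OWN_TAGS))  # 1
```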

Why this is nice

The above dance is nice for several reasons:

- This is an open, convention-based, extensible, and validated application ecosystem. LinkedIn just writes data to Fluidinfo and periodically checks for resolution. In effect it is giving Tim the power to use any application he wants to resolve the request in any way he wants. Participating applications just follow the established tag naming convention. LinkedIn knows that if any application attaches a tag of the form */accepted with value 9871261721498793 to the object, it must represent a validated acceptance by Tim.

- As a result, anyone can play. An idiosyncratic resolver such as that based on the O’Reilly org chart is as legitimate a contributor as any other. None of the resolvers needed to ask for permission (from LinkedIn) to participate, or needed to be anticipated by LinkedIn.

- There are no API calls between the applications involved. This is significant because APIs have to be designed in advance and you need permission to use them. In our scenario, all communication between applications is done via a data protocol: adding to and retrieving from shared storage.

- LinkedIn is free to ignore the */accepted tags from any application if it chooses.

- Tim can withdraw permission for a resolver to work on his behalf, simply by taking away that application’s permission to read tim/friend-request/linkedin tags.

- Tim can stop LinkedIn from creating friend resolution tags by removing the linkedin.com user’s permission to add the tim/friend-request/linkedin tag to objects. That would be somewhat extreme, seeing as LinkedIn is likely to offer its users a simpler way to turn off the feature, but it’s worth pointing out that Tim has control.

- To those who don’t have permission to read tim/friend-request/linkedin tags, there is no way to see who has made the friend request. (The fact that Tim is the target could also be easily obscured, if wanted.)

- All communication is convention-based and asynchronous. This resembles the way we (and other organisms) often communicate in natural systems. I suspect most communication of information between living organisms is asynchronous, though I have no way to quantify this. Asynchronous communication via conventions in shared storage (e.g., those seen in Twitter with hashtags and @addressing) is so powerful because it is open-ended and evolutionary. Fit conventions (in the biological sense) will flourish. Conventions can be extended by any player, without harm. I wrote more on this in Dancing out of time: Thoughts on asynchronous communication.

Note that the above is just an example of how applications can communicate indirectly and asynchronously through shared storage using evolving conventions instead of using direct, synchronous, predefined API calls between one another. We have seen a solution to a difficult address book problem that has not involved writing an address book application. Instead, the problem is solved by a set of lightweight and loosely coupled cooperating applications communicating through data. I have (very slowly!) come to realize that this form of inter-application communication is an important part of what Fluidinfo makes possible. This is all enabled by the simple move to shared writable storage, coupled with a flexible permissions model and a query language.

Thanks for reading. I really hope you’ll find this as interesting as I do. Thanks to Nicholas Radcliffe, Tim O’Reilly, and Bar Shirtcliff for comments that greatly improved the above.