We’d like to introduce you to “Flimp” (the FLuiddb IMPorter), a tool that makes it easy to import data into FluidDB.

It works in two ways:

- Given a source file containing a data dump (in json, yaml or csv format), Flimp will create the necessary FluidDB namespaces and tags and then import the records. (We expect to support more file formats soon.)

- Given a filesystem path, Flimp will create the necessary FluidDB namespaces (based on directories) and tags (based on file names) and then import file contents as values tagged on a single FluidDB object.
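To make the filesystem mapping concrete, here is a minimal Python sketch of how a directory tree could be turned into tag paths and values. It is not Flimp’s code: the function name filesystem_to_tags, the choice to keep file extensions in tag names and reading contents as raw bytes are all assumptions for illustration.

```python
import os


def filesystem_to_tags(root_dir, root_namespace):
    """Map every file under root_dir to a tag path and its contents.

    Hypothetical sketch: directories become namespaces and file names
    become tags, all joined under root_namespace with '/'.
    """
    tags = {}
    for dirpath, dirnames, filenames in os.walk(root_dir):
        dirnames.sort()  # make the walk order deterministic
        rel_dir = os.path.relpath(dirpath, root_dir)
        # The root directory itself maps straight onto root_namespace.
        parts = [] if rel_dir == os.curdir else rel_dir.split(os.sep)
        for filename in sorted(filenames):
            tag_path = "/".join([root_namespace] + parts + [filename])
            with open(os.path.join(dirpath, filename), "rb") as f:
                tags[tag_path] = f.read()  # file contents become the tag value
    return tags
```

For a tree containing readme.txt and sub/notes.txt with the root namespace “alice/docs”, this yields the tags “alice/docs/readme.txt” and “alice/docs/sub/notes.txt”. Flimp then attaches such values to a single FluidDB object (chosen with the “--uuid” or “--about” options); the sketch stops at building the mapping.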

Flimp can be configured to do custom pre-processing (e.g. cleaning, normalizing or modifying) before data is imported into FluidDB. It’s important to note that Flimp is in active development and that we welcome comments, ideas, and bug reports. Flimp is built on fom (the Fluid Object Mapper) created by my colleague Ali Afshar.

As a test, we’ve imported all the metadata from data.gov and data.gov.uk using Flimp and made it publicly readable. The rest of this article explains exactly how we did it so you can also start importing data into FluidDB using Flimp.

Open Government Data

source: http://www.flickr.com/photos/opensourceway/4371001268/

Governments are making their data openly available to citizens. This has resulted in a tidal wave of hitherto unavailable information flowing onto the Internet.

Unfortunately, it’s very easy to be swamped by both the sheer amount and the diversity of what is available. Furthermore, despite progress in this area, the data is still difficult to search and explore, and because governments publish it in many different ways, datasets are hard to link and annotate.

Both the US and UK government data sites provide a dump of their metadata (data describing the data they have available). Finding this invaluable information is hard, so for the record here’s a link to the US dump and here’s a link to the UK dump. These are the sources Flimp imported into FluidDB. No doubt there are more from other governments and when found they’ll also mysteriously find their way into FluidDB.

Get Flimp

Flimp is written in the Python programming language, so you’ll need Python installed first, along with setuptools. Once you have these requirements, there are two ways to get Flimp:

- If you want the latest and greatest “bleeding edge” version then go visit the project’s website and follow the appropriate links/instructions.

- If you’d rather use the current packaged stable release then follow the instructions below. The rest of this article deals with Flimp version 0.6.1.

To install the latest stable release, open a terminal and issue the following commands (Flimp depends on fom and PyYAML):

$ easy_install fom
$ easy_install PyYAML
$ easy_install flimp

Once it is installed, you can check that Flimp works by running the “flimp” command like this:

$ flimp --version
flimp 0.6.1

That’s it! You have both the “flimp” command line tool installed and the associated libraries used for importing data into FluidDB.

Help is always available via the command line tool:

$ flimp --help
Usage: flimp [options]

Options:
  --version             show program's version number and exit
  -h, --help            show this help message and exit
  -f FILE, --file=FILE  The FILE to process (valid filetypes: .json, .csv,
                        .yaml)
  -d DIRECTORY, --dir=DIRECTORY
                        The root directory for a filesystem import into
                        FluidDB
  -u UUID, --uuid=UUID  The uuid of the object to which the filesystem import
                        is to attach its tags
  -a ABOUT, --about=ABOUT
                        The about value of the object to which the filesystem
                        import is to attach its tags
  -p, --preview         Show a preview of what will happen, don't import
                        anything
  -i INSTANCE, --instance=INSTANCE
                        The URI for the instance of FluidDB to use
  -l LOG, --log=LOG     The log file to write to (defaults to flimp.log)
  -v, --verbose         Display status messages to console
  -c, --check           Validate the data file containing the data to import
                        into FluidDB - don't import anything

Importing from data.gov.uk

First, we registered the user “data.gov.uk”. Because the imported tags are associated only with the data.gov.uk user, you can be sure of the source of the data. (We’d love this user to be under the control of someone from data.gov.uk, so contact us if this applies to you.)

Next, we downloaded a json dump of the UK’s metadata. A quick look at the raw file indicated that it was already in a remarkably good state, but we wanted to make sure. Flimp helps out:

$ flimp --file=uk_data_dump.json --check
Working... (this might take some time, why not: tail -f the log?)
The following MISSING fields were found:
geographical_granularity
temporal_coverage-from
temporal_coverage_to
geographic_granularity
temporal_coverage_from
taxonomy_url
import_source
temporal_coverage-to
Full details in the missing.json file

Flimp uses the first item in the json dump as a template for the schema. The “--check” flag tells Flimp to verify that every item matches this schema. In this case some items lack some of the fields. This isn’t a problem, and the “missing.json” file lists exactly which items these are. Importantly, Flimp also checks whether any items have extra fields that are not in the template. That would be more of an issue, but Flimp would help by reporting the problem items so you can fix them.
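The check described above amounts to a set comparison against the first record. The following Python sketch shows the idea; it is a hypothetical illustration rather than Flimp’s implementation, and both the helper names (flatten, check_records) and the convention of treating nested dicts as “/”-separated field paths are assumptions (the latter suggested by the “extras/...” tags in the preview output further on).

```python
def flatten(record, prefix=""):
    """Flatten nested dicts into '/'-separated field paths, e.g. 'extras/agency'."""
    fields = set()
    for key, value in record.items():
        path = prefix + key
        if isinstance(value, dict):
            fields |= flatten(value, path + "/")
        else:
            fields.add(path)
    return fields


def check_records(records):
    """Compare every record against the first one, used as the schema template.

    Returns (missing, extra): dicts mapping a field path to the indexes of
    the records that lack it, or that have it when the template does not.
    """
    template = flatten(records[0])
    missing, extra = {}, {}
    for index, record in enumerate(records):
        fields = flatten(record)
        for field in template - fields:
            missing.setdefault(field, []).append(index)
        for field in fields - template:
            extra.setdefault(field, []).append(index)
    return missing, extra
```

For example, if the first record has “extras/agency” but the second doesn’t, the second record’s index shows up under missing["extras/agency"]; a field that only a later record carries lands in extra, which is the more serious case.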

It is also possible to preview what Flimp would do when importing the data:

$ flimp --file=uk_data_dump.json --preview
FluidDB username: data.gov.uk
FluidDB password:
Absolute Namespace path (under which imported namespaces and tags will be created): data.gov.uk/meta
Name of dataset (defaults to filename) [uk_data_dump]: data.gov.uk:metadata
Key field for about tag value (if none given, will use anonymous objects): id
Description of the dataset: Metadata from data.gov.uk
Working... (this might take some time, why not: tail -f the log?)

Preview of processing 'uk_data_dump.json'
The following namespaces/tags will be generated.
data.gov.uk/meta/relationships
data.gov.uk/meta/ratings_average
data.gov.uk/meta/maintainer
data.gov.uk/meta/name
data.gov.uk/meta/license
data.gov.uk/meta/author
data.gov.uk/meta/url
data.gov.uk/meta/notes
data.gov.uk/meta/title
data.gov.uk/meta/maintainer_email
data.gov.uk/meta/author_email
data.gov.uk/meta/state
data.gov.uk/meta/version
data.gov.uk/meta/resources
data.gov.uk/meta/groups
data.gov.uk/meta/ratings_count
data.gov.uk/meta/license_id
data.gov.uk/meta/revision_id
data.gov.uk/meta/id
data.gov.uk/meta/tags
data.gov.uk/meta/extras/national_statistic
data.gov.uk/meta/extras/geographic_coverage
data.gov.uk/meta/extras/geographical_granularity
data.gov.uk/meta/extras/external_reference
data.gov.uk/meta/extras/temporal_coverage-from
data.gov.uk/meta/extras/temporal_granularity
data.gov.uk/meta/extras/date_updated
data.gov.uk/meta/extras/agency
data.gov.uk/meta/extras/precision
data.gov.uk/meta/extras/geographic_granularity
data.gov.uk/meta/extras/temporal_coverage_to
data.gov.uk/meta/extras/temporal_coverage_from
data.gov.uk/meta/extras/taxonomy_url
data.gov.uk/meta/extras/import_source
data.gov.uk/meta/extras/temporal_coverage-to
data.gov.uk/meta/extras/department
data.gov.uk/meta/extras/update_frequency
data.gov.uk/meta/extras/date_released
data.gov.uk/meta/extras/categories
4023 records will be imported into FluidDB

The “--preview” flag does exactly what you’d expect: it asks you the same questions as a real import would, but instead of importing anything it lists the namespace/tag combinations that will be created and the number of new objects to be annotated.
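The namespace/tag list in a preview like this can be derived from the template record alone. Here is a hypothetical Python sketch (the function name preview_tag_paths is invented, and it is not Flimp’s code) that, like the output above, turns nested dicts such as “extras” into namespaces and every other key into a tag under the chosen root path:

```python
def preview_tag_paths(root_path, template):
    """List the namespace/tag paths an import would create (hypothetical sketch).

    Keys holding nested dicts become namespaces; every other key becomes a tag.
    """
    paths = []
    for key, value in sorted(template.items()):
        path = root_path + "/" + key
        if isinstance(value, dict):
            # A nested dict becomes a namespace; recurse to list its tags.
            paths.extend(preview_tag_paths(path, value))
        else:
            paths.append(path)
    return paths


template = {"id": "x", "title": "Crime", "extras": {"agency": "Home Office"}}
for path in preview_tag_paths("data.gov.uk/meta", template):
    print(path)
```

The paths are sorted here only to make the output predictable; Flimp’s own preview lists them in a different order.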

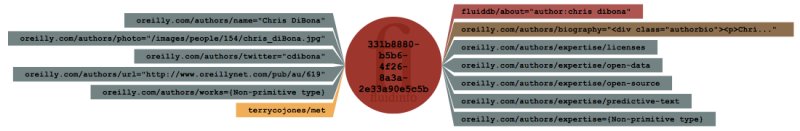

It’s important to understand how Flimp generates the “about” tag value (unsurprisingly, the about tag value indicates what each object in FluidDB is about). It needs to be unique and descriptive of what the object represents, so Flimp asks you to identify a field in your data containing unique values and appends its value to the end of the name of the dataset (in the example above, “id” was identified as the key field):

fluiddb/about = "data.gov.uk:1ea4bfa9-9ae1-4be0-ae73-e0c4a26caa6c"

If you don’t provide a key field, Flimp simply creates a new anonymous object without an associated “about” value.
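Both cases can be sketched in a few lines of Python. This is an illustration, not Flimp’s code: the function about_values is hypothetical, and because the exact way the prefix is derived from the dataset name isn’t spelled out here, the prefix (“data.gov.uk” in the example above) is simply passed in. The sketch also enforces the uniqueness requirement by raising an error on duplicates.

```python
def about_values(records, prefix, key_field=None):
    """Build an about value for each record (hypothetical sketch).

    With key_field=None every record gets None, i.e. an anonymous object.
    Otherwise the about value is '<prefix>:<key value>' and must be unique.
    """
    if key_field is None:
        return [None] * len(records)
    abouts = ["%s:%s" % (prefix, record[key_field]) for record in records]
    seen = set()
    for about in abouts:
        if about in seen:
            # Two objects with the same about value would collide in FluidDB.
            raise ValueError("duplicate about value: %r" % about)
        seen.add(about)
    return abouts
```

Calling about_values(records, "data.gov.uk", "id") on a record whose id is 1ea4bfa9-9ae1-4be0-ae73-e0c4a26caa6c gives the about value shown above.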

Nicholas Radcliffe’s About Tag blog is a great source of further information about the emerging conventions surrounding the “about” tag.

Since Flimp had satisfied us that the json data was in a good state, we simply issued the following command to start the actual import:

$ flimp --file=uk_data_dump.json
FluidDB username: data.gov.uk
FluidDB password:
Absolute Namespace path (under which imported namespaces and tags will be created): data.gov.uk/meta
Name of dataset (defaults to filename) [uk_data_dump]: data.gov.uk:metadata
Key field for about tag value (if none given, will use anonymous objects): id
Description of the dataset: Metadata from data.gov.uk
Working... (this might take some time, why not: tail -f the log?)

Notice how Flimp prompts you for sensitive information, so you don’t have to store your username and password in a configuration file.

Once the import completed, Flimp left a record of exactly what it did in the “flimp.log” file in the current directory.
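If you write your own import script on top of Flimp, you can follow the same practice. Here is a minimal Python 3 sketch using the standard library’s getpass module; the function name prompt_credentials is hypothetical, and the two reader functions are parameters only so the code can be tested without a terminal.

```python
import getpass


def prompt_credentials(read_line=input, read_secret=getpass.getpass):
    """Ask for FluidDB credentials at run time instead of storing them on disk."""
    username = read_line("FluidDB username: ").strip()
    password = read_secret("FluidDB password: ")  # not echoed to the terminal
    return username, password
```

In a script like the data.gov one further down, fdb.login(*prompt_credentials()) could replace the hard-coded password.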

Importing from data.gov

As with the UK data, we used an appropriate FluidDB username for importing the US data: data.gov. The same request applies: the data.gov user should be under the control of someone from data.gov, so please contact us if this applies to you.

We took a different approach to the US metadata, which data.gov provides either as an rdf document or as a csv file. Since Flimp understands csv, we used the csv file as the source.

We wanted to make sure that the headers in the csv file (which get transformed into the names of tags in FluidDB) were cleaned and normalized appropriately, since they contained lots of whitespace and non-alphanumeric characters. The snippet of Python code below demonstrates how we re-used Flimp in our own import script to do this.

from flimp.utils import process_data_list
from flimp.parser import parse_csv
from fom.session import Fluid

def clean_header(header):
    """
    A function that takes a column header and normalises / cleans it into
    something we'll use as the name of a tag
    """
    # remove leading/trailing whitespace, replace inline spaces with
    # underscores and any slashes with dashes.
    return header.strip().replace(' ', '_').replace('/', '-')

csv_file = open("data_gov.csv", "r")
data = parse_csv.parse(csv_file, clean_header)
# data now contains the normalized input from the csv file

# Use fom to create a session with FluidDB - remember flimp uses fom for
# connecting to FluidDB
fdb = Fluid()  # defines a session with FluidDB
fdb.login('data.gov', 'secretpassword123')  # replace these with something that works
fdb.bind()

root_path = 'data.gov/meta'  # namespace where imported namespaces/tags are created
name = 'data.gov:metadata'  # used when creating namespace/tag descriptions
desc = 'Metadata from data.gov'  # a description of the dataset
about = 'URL'  # field whose value to use for the about tag

# the following function call imports the data
result = process_data_list(data, root_path, name, desc, about)
print(result)
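The clean_header function above only handles spaces and slashes. If you also want to drop other non-alphanumeric characters, or to normalize headers without going through flimp.parser, here is a standard-library-only Python sketch. The allowed character set (letters, digits, “_”, “-” and “.”) is an assumption about what makes a safe tag name, not a documented FluidDB rule, and normalise_header and read_clean_csv are hypothetical names.

```python
import csv
import io
import re


def normalise_header(header):
    """Turn a raw csv column header into a tag-friendly name (assumed rule set).

    Strips surrounding whitespace, turns runs of whitespace into '_',
    slashes into '-', and drops any other character that is not a letter,
    digit, '_', '-' or '.'.
    """
    name = re.sub(r"\s+", "_", header.strip())
    name = name.replace("/", "-")
    return re.sub(r"[^A-Za-z0-9_.\-]", "", name)


def read_clean_csv(stream, clean=normalise_header):
    """Read csv rows as dicts whose keys are the cleaned headers."""
    reader = csv.reader(stream)
    headers = [clean(h) for h in next(reader)]
    return [dict(zip(headers, row)) for row in reader]


if __name__ == "__main__":
    sample = io.StringIO(" Agency Name ,Data/Format,Rating (avg)\nEPA,csv,4\n")
    print(read_clean_csv(sample))
```

One caveat with any normalization scheme: two different headers can collapse to the same name (for example “Rating (avg)” and “Rating avg” both become “Rating_avg”), so a real import script should check for collisions before importing.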

Conclusion

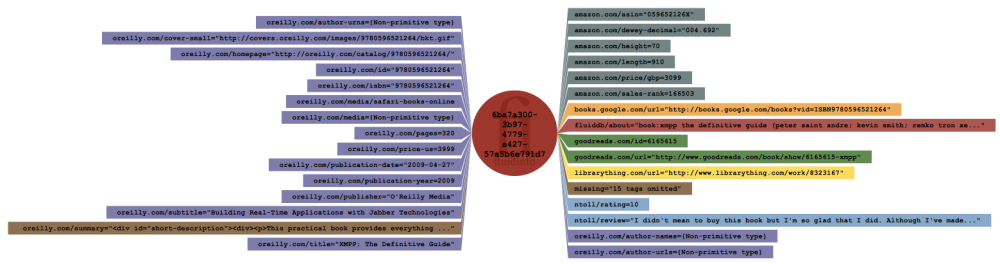

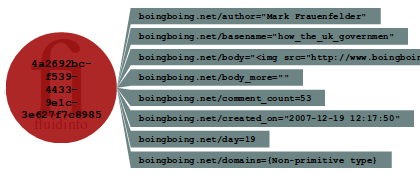

By importing the metadata into FluidDB we immediately gain the following:

- FluidDB’s consistent, simple and elegant RESTful API as a view into the data.

- The possibility of simple yet powerful queries across all the metadata.

- The opportunity to annotate, link and augment the existing data with contributions from other sources.

Since the imported metadata is publicly readable, any application can now access it. In a future post I’ll demonstrate how to build a web-based interface for this data that is also hosted within FluidDB. I’ll also show how to query, annotate and link the data yourself, and how to re-use the contributions of others.