Surely that can only mean one thing: Tornado is based on Twisted. Right?

Incredibly, no. Words fail me on this one. I’ve spent some hours today trying to put my thoughts into order so I could put together a reasonably coherent blog post on the subject. But I’ve failed. So here are some unstructured thoughts.

First of all, I’m not meaning to bash Facebook on this. At Fluidinfo we use their Thrift code. We’ll almost certainly use Scribe for logging at some point, and we’re seriously considering using Cassandra. Esteve Fernandez has put a ton of work into txAMQP to glue together Thrift, Twisted, and AMQP, and in the process became a Thrift committer.

Second, you can understand, or make an educated guess at, what happened: the Facebook programmers, like programmers everywhere, were strongly tempted to just write their own code instead of dealing with someone else’s. It’s not just about learning curves and fixing deficiencies; there are also issues of speed of changes and of control. At Fluidinfo we suffered through six months of maintaining our own set of related patches to Thrift before the Twisted support Esteve wrote was finally merged to trunk. That was painful, and the temptation to scratch our own itch, fork, and forget about the official Thrift project was high.

Plus, Twisted suffers from the fact that the documentation is not as good as it could be. I commented at length on this over three years ago. Please read the follow-up posts in that thread for an illustration (one of many) of the maturity of the people running Twisted. Also note that the documentation has improved greatly since then. Nevertheless, Twisted is a huge project, it has tons of parts, and it’s difficult to document and to wrap your head around no matter what.

So you can understand why Facebook might have decided not to use Twisted. In their words:

We ended up writing our own web server and framework after looking at existing servers and tools like Twisted because none matched both our performance requirements and our ease-of-use requirements.

I’m betting it’s that last part that’s the key to the decision not to use Twisted.

But seriously…… WTF?

Twisted is an amazing piece of work, written by some truly brilliant coders, with huge experience doing exactly what Facebook set out to reinvent.

This is where I’m at a loss for words. I think: “what an historic missed opportunity” and “reinventing the wheel, badly” and “no, no, no, this cannot be” and “this is just so short-sighted” and “a crying shame” and many things besides.

Sure, Twisted is difficult to grok. But that’s no excuse. It’s a seriously cool and powerful framework, vastly more sophisticated and useful and extensible than what Facebook have cobbled together. Facebook could have worked to improve twisted.web (which everyone agrees has some shortcomings). It could have benefitted greatly from even a small fraction of the resources Facebook must have put into Tornado, and the end result would have been much better. Or Facebook could have just ignored twisted.web and built directly on top of the Twisted core. That would have been great too.

Or Facebook could have had a team of people who knew how to do it better, and produced something better than Twisted. I guess that’s the real frustration here – they’ve put a ton of effort into building something much more limited in scope and vision, and even the piece that they did build looks like a total hack built to scratch their short term needs.

What’s the biggest change in software engineering over the last decade? Arguably it’s the rise of test-driven development. I’m not the only one who thinks so. Yet here we are in late 2009 and Facebook have released a major piece of code with no test suite. Amazing. OK, you could argue this is a minor thing, that it’s not core to Tornado. That argument has some weight, but it’s hard to think that this thing is not a hack.

If you decide to use an asynchronous web framework, do you expect to have to manually set your sockets to be non-blocking? Do you feel like catching EWOULDBLOCK and EAGAIN yourself? Those sorts of things, and their non-portability (even within the world of Linux) are precisely the kinds of things that lead people away from writing C and towards doing rapid development using something robust and portable that looks after the details. They’re precisely the things Twisted takes care of for you, and which (at least in Twisted) work across platforms, including Windows.
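To make that concrete, here is a minimal sketch of the sort of bookkeeping you take on when you manage non-blocking sockets by hand. It is not code from Tornado or Twisted; the helper name `recv_nonblocking` and the use of `socket.socketpair()` as a stand-in for a real connection are assumptions made purely for illustration.

```python
import errno
import select
import socket


def recv_nonblocking(sock, bufsize=4096):
    """Try to read from a non-blocking socket (hypothetical helper).

    Returns the bytes read (b"" means the peer closed the connection),
    or None if no data is available yet.
    """
    try:
        return sock.recv(bufsize)
    except OSError as exc:
        # EAGAIN and EWOULDBLOCK are the same number on some platforms
        # and different on others, so check for both.
        if exc.errno in (errno.EAGAIN, errno.EWOULDBLOCK):
            return None
        raise


# A connected pair of sockets stands in for a real client connection.
a, b = socket.socketpair()
a.setblocking(False)  # the manual step the text refers to
b.setblocking(False)

first = recv_nonblocking(b)  # nothing has been sent yet

a.sendall(b"ping")
select.select([b], [], [], 1.0)  # wait (up to 1s) until b is readable
second = recv_nonblocking(b)

a.close()
b.close()
```

Even this toy version has to know about two error codes, a readiness call, and the meaning of an empty read. A framework like Twisted keeps all of that behind its transport and protocol abstractions.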

It looks like Tornado are using a global reactor, which the Twisted folks have learned the hard way is not the best solution.

Those are just some of the complaints I’ve heard and seen in the Tornado code. I confess I’ve looked only superficially at their code – but more than enough to feel such a sense of lost opportunity. They built a small subsection of Twisted, they’ve done it with much less experience and elegance and hiding of detail than the Twisted code, and the thing doesn’t even come with a test suite. Who knows if it actually works, or when, or where, etc.?

And…. Twisted is so much more. HTTP is just one of many protocols Twisted speaks, including (from their home page): “TCP, UDP, SSL/TLS, multicast, Unix sockets, a large number of protocols including HTTP, NNTP, IMAP, SSH, IRC, FTP, and others”.

Want to build a sophisticated, powerful, and flexible asynchronous internet service in Python? Use Twisted.

A beautiful thing about Twisted is that it expands your mind. Its abstractions (particularly the clean separation and generality of transports, protocols, factories, services, and Deferreds; see here and here and here) make you a better programmer. As I wrote to some friends in April 2006: “Reading the Twisted docs makes me feel like my brain is growing new muscles.”

Twisted’s deferreds are extraordinarily elegant and powerful. I’ve blogged and posted to the Twisted mailing list about them on multiple occasions. Learning to think in the Twisted way has been a programming joy to me over the last three years, so you can perhaps imagine my dismay that a company with the resources of Facebook couldn’t be bothered to get behind it and had to go reinvent the wheel, and do it so poorly. What a pity.

In my case, I threw away an entire year of C code in order to use Twisted in FluidDB. That was a decision I didn’t take lightly. I’d written my own libraries to do lots of low level network communications and RPC – including auto-generating server and client side glue libraries to serialize and unserialize RPC calls and args (a bit like Thrift), plus a server and tons of other stuff. I chucked it because it was too brittle. It was too much of a hack. It wasn’t portable enough. It was too hard to get the details right. It wasn’t extensible.

In other words….. it was too much like Tornado! So I threw it all away in favor of Twisted. As I happily tell people, FluidDB is written in Python so it can use Twisted. It was a question of an amazingly great asynchronous networking framework determining the choice of programming language. And this was done in spite of the fact that I thought the Twisted docs sucked badly. The people behind Twisted were clearly brilliant and the community was great. There was an opportunity to make a bet on something and to contribute. I wish Facebook had made the same decision. It’s everyone’s loss that they did not. What a great pity.

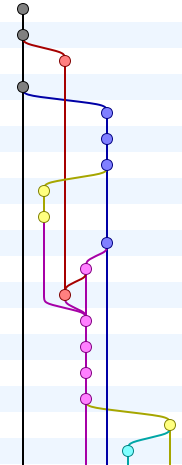

I’ve been playing with Keynote to make a presentation. There are a lot of things I don’t really like about using a Mac, but Keynote is not one of them.

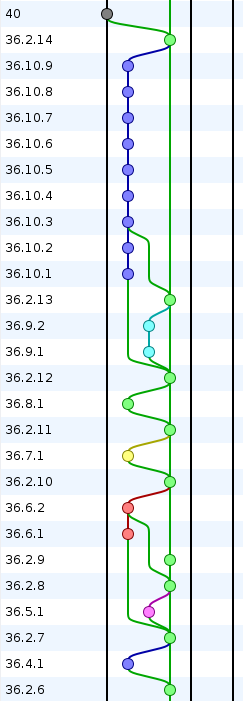

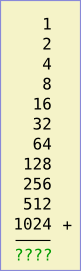

On the left is an addition problem. If you know the answer without thinking, you’re probably a geek.

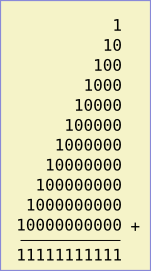

Let’s instead think about the problem in binary (i.e., base 2). In binary, the sum looks like the image on the right.
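The numbers on the slide live in the image, so the operands below (64 + 64) are a hypothetical stand-in, chosen only to show what writing a sum in base 2 looks like. The function name `sum_in_binary` is likewise made up for this sketch.

```python
def sum_in_binary(x, y):
    """Return both operands and their sum as base-2 strings."""
    return format(x, "b"), format(y, "b"), format(x + y, "b")


# Hypothetical operands; the slide's real numbers are not reproduced here.
left, right, total = sum_in_binary(64, 64)
print(f"{left} + {right} = {total}")  # prints "1000000 + 1000000 = 10000000"
```

Adding a power of two to itself just appends a zero in binary, which is why sums like this are easy for anyone used to thinking in base 2.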