In my last posting I argued that we should drop all talk about Artificial Intelligence when discussing the semantic web, web 3.0, etc., and acknowledge that in fact it’s all about data. There are two points in that statement. I was scratching an itch and so I only argued one of them. So what about my other claim?

While I’m not ready to describe what my company is doing, there’s a lot I can say about why I claim that data is the important thing.

Suppose something crops up in the non-computational “real-world” and you decide to use a computer to help address the situation. An inevitable task is to take the real-world situation and somehow get it into the computational system so the computer can act on it. Thus one of the very first tasks we face when deciding to use a computer is one of representation. Given information in the real world, we must choose how to represent it as data in a computer. (And it always is a choice.)

So when I say that data is important, I’m mainly referring to information representation. In my opinion, representation is the unacknowledged cornerstone of problem solving and algorithms. It’s fundamentally important and yet it’s widely ignored.

When computer scientists and others talk about problem solving and algorithms, they usually ignore representation. Even in the genetic algorithms community, in which representation is obviously needed and is a required explicit choice, the subject receives little attention. But if you think about it, in choosing a representation you have already begun to solve the problem. In other words, representation choice is a part of problem solving. But it’s never talked about as being part of a problem-solving algorithm. In fact though, if you choose your representation carefully the rest of the problem may disappear or become so trivial that it can be solved quickly by exhaustive search. Representation can be everything.

To illustrate why, here are a couple of examples.

Example 1. Suppose I ask you to use a computer to find two positive integers that have a sum of 15 and a product of 56. First, let’s pick some representation of a positive integer. How about a 512-bit binary string for each integer? That should cover it, I guess. We’ll have two of them, so that will be 1,024 bits in our representation. And here’s an algorithm, more or less: repeatedly set the 1,024 bits at random, add the corresponding integer values, to see if they sum to 15. If so, multiply them and check the product too.

But wait, wait, wait… even my 7-year-old could tell you that’s not a sensible approach. It will work, eventually. The state search space has 21024 candidate solutions. Even if we test a billion billion billion of them per second, it’s going to take much longer than a billion years.

Instead, we could think a little about representation before considering what would classically be called the algorithm. Aha! It turns out we could actually represent each integer using just 4 bits, without risk of missing the solution. Then we can use our random (or an exhaustive) search algorithm, and have the answer in about a billionth of a second. Wow.

Of course this is a deliberately extreme example. But think about what just happened. The problem and the algorithm are the same in both of the above approaches. The only thing that changed was the representation. We coupled the stupidest possible algorithm with a good representation and the problem became trivial.

Example 2. Consider the famous Eight Queens problem (8QP). That’s considerably harder than the above problem. Or is it?

Let’s represent a chess board in the computer using a 64-bit string, and make sure that exactly 8 bits are set to one to indicate the presence of a queen. We’ll devise a clever algorithm for coming up with candidate 64-bit solutions, and write code to check them for correctness. But the search space is 264, and that’s not a small number. It could easily take a year to run through that space, so the algorithm had better be pretty good!

But wait. If you put a queen in row R and column C, no other queen can be in row R or column C. Following that line of thinking, you can see that all possibly valid solutions can be represented by a permutation of the numbers 1 through 8. The first number in the permutation gives the column of the queen in the first row, and so on. There are only 8! = 40,320 possible arrangements that need to be checked. That’s a tiny number. We could program it up, use exhaustive search as our algorithm, and have a solution in well under a second!

Once again, a change of representation has a radical impact on what people would normally think of as the problem. But the problem isn’t changing at all. What’s happening is that when you choose a representation you have actually already begun to solve the problem. In fact, as the examples show, if you get the representation right enough the “problem” pretty much vanishes.

These are just two simple examples. There are many others. You may not be ready to generalize from them, but I am.

I think fundamental advances based almost solely on improved representation lie just ahead of us.

I think that If we adopt a better representation of information, things that currently look impossible may even cease to look like problems.

There are other people who seem to believe this too, though perhaps implicitly. Web 3.0, whatever that is, can bring major advances without anyone needing to come up with new algorithms. Given a better representation we could even use dumb algorithms (though perhaps not pessimal algorithms) and yet do things that we can’t do with “smart” ones. I think this is the realization, justifiably exciting, that underlies the often vague talk of “web 3.0″, the “read/write web”, the “data web”, “data browsing”, the infinite possible futures of mash ups, etc.

This is why, to pick the most obvious target, I am certain that Google is not the last word in search. It’s probably not a smart idea to try to be smarter than Google. But if you build a computational system with a better underlying representation of information you may not need to be particularly intelligent at all. Things that some might think are related to “intelligence”, including the emergence of a sexy new “semantic” web, may not need much more than improved representation.

Give a 4-year-old a book with a 90%-obscured picture of a tiger in the jungle. Ask them what they see. Almost instantly they see the tiger. It seems incredible. Is the child solving a problem? Does the brain or the visual system use some fantastic algorithm that we’ve not yet discovered? Above I’ve given examples of how better representation can turn things that a priori seemed to require problem solving and algorithms into things that are actually trivial. We can extend the argument to intelligence. I suspect it’s easy to mistake someone with a good representation and a dumb algorithm as being somehow intelligent.

I bet that evolution has produced a representation of information in the brain that makes some problems (like visual pattern matching) non-existent. I.e., not problems at all. I bet that there’s basically no problem solving going on at all in some things people are tempted to think of as needing intelligence. The “algorithm”, and I hesitate to use that word, might be as simple as a form of (chemical) hill climbing, or something even more mundane. Perhaps everything we delight in romantically ascribing to native “intelligence” is really just a matter of representation.

That’s why I believe data (aka information representation) is so extremely important. That’s where we’re heading. It’s why I’m doing what I’m doing.

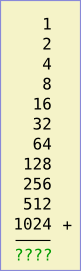

On the left is an addition problem. If you know the answer without thinking, you’re probably a geek.

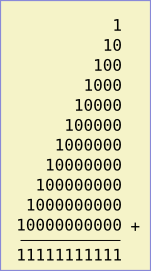

On the left is an addition problem. If you know the answer without thinking, you’re probably a geek. Let’s instead think about the problem in binary (i.e., base 2). In binary, the sum looks like the image on the right.

Let’s instead think about the problem in binary (i.e., base 2). In binary, the sum looks like the image on the right.